
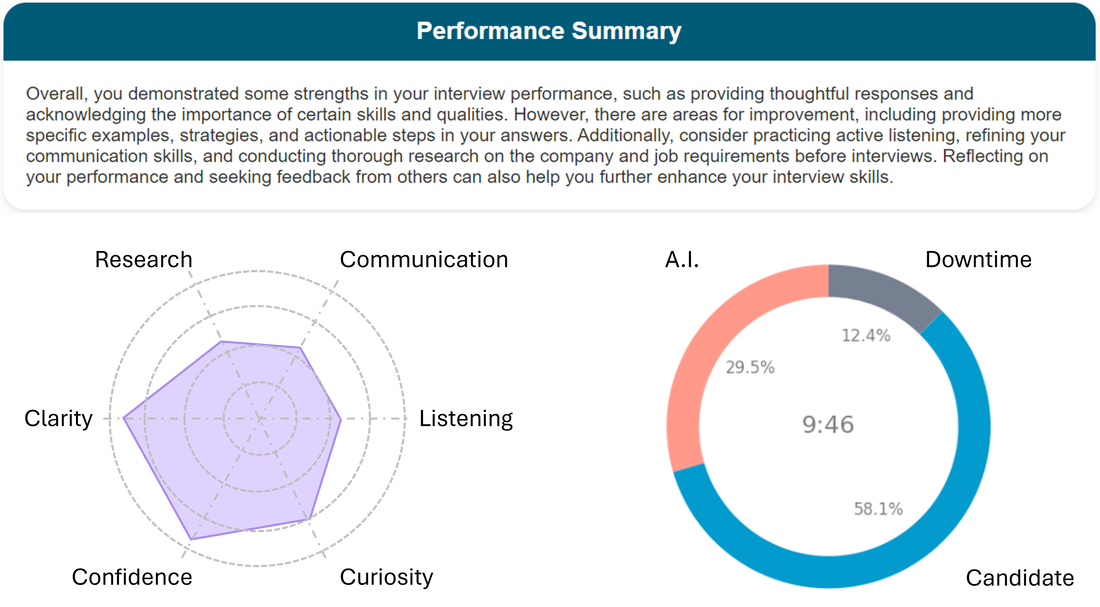

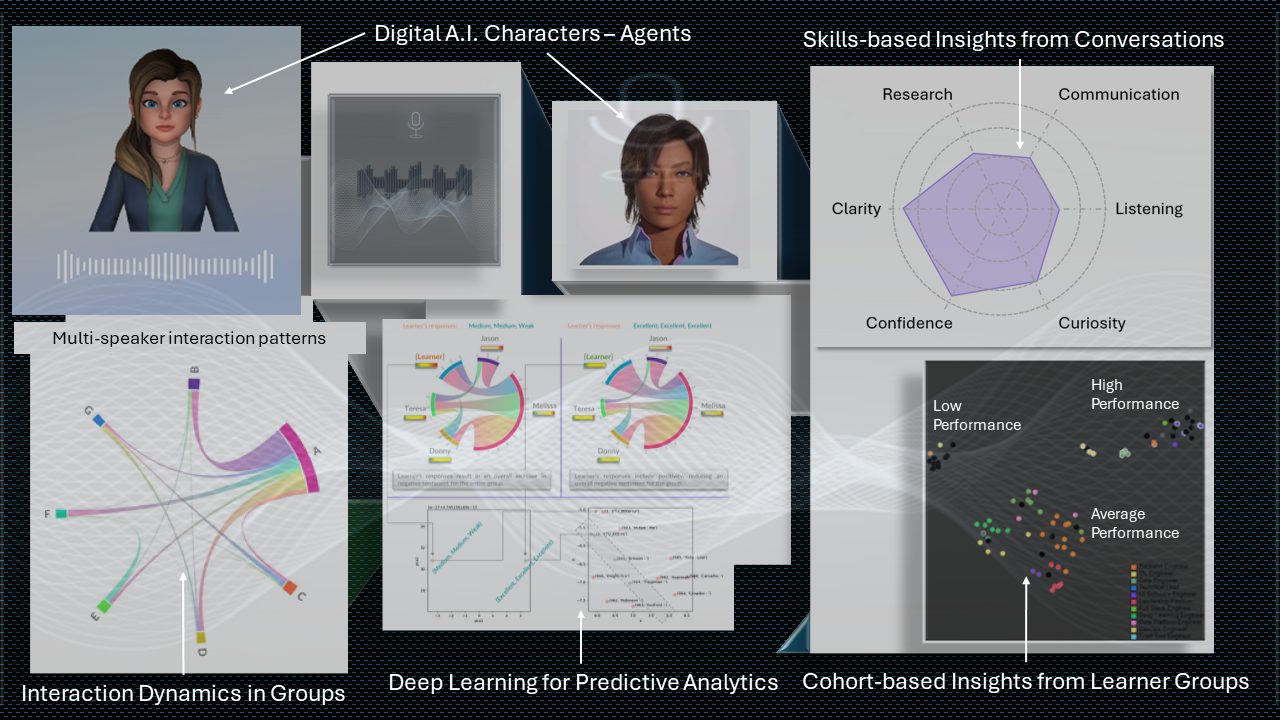

In my first blog post about Generative AI and Large Language Models (LLMs), I explained their inner workings and why they are well-suited for adoption in both academic and business contexts. This second post builds on that introduction by looking at how LLMs behave in a specific context. I will focus on the characteristics that make them well-suited to career readiness preparation, from the grassroots stage (high schools, colleges) to later in your career, when you’re ready to land your dream role at your favorite company.

We’ve already seen that LLMs are revolutionizing various fields, including interview coaching to find your first job, college admissions preparation, and preparing for job interviews while navigating various careers (experience a demo). Their unique properties enable personalized guidance based on individual performance, making them an invaluable tool in refining the human skills needed to be successful in any career. Let’s look at some of these properties in more detail and explore the science behind “why” LLMs are so well-suited to providing personalized guidance, and what constraints must be put in place to ensure a reliable output from these models.

Advanced Natural Language Understanding

LLMs possess advanced natural language understanding capabilities, allowing them to accurately interpret interview responses. They can comprehend nuances in language, identifying strengths and weaknesses in communication skills. A good reference for this capability is the paper "Improving Language Understanding by Generative Pre-Training" by Radford, Narasimhan, Salimans, and Sutskever (2018). This paper introduces the decoder-style architecture now popular in LLMs, focusing on pretraining via next-word prediction, which enables these models to comprehend nuances in language.

To take advantage of this capability, it is important to provide the A.I. model with information and context that are specific to your needs. There are several ways to do this, ranging from few-shot learning to fine-tuning, the details of which are beyond the scope of this blog. Courses such as these from DeepLearning.ai can be extremely useful if you are new to this field.

Adaptive Feedback Mechanisms

These models utilize adaptive feedback mechanisms to tailor coaching based on individual needs. By analyzing interview responses, LLMs can provide targeted feedback, focusing on areas requiring improvement while reinforcing strengths. To better understand the science of LLMs and their adaptive feedback mechanisms to tailor coaching based on individual needs, you can refer to the following articles:

Attention Mechanisms & Transfer Learning

LLMs leverage vast datasets to generate data-driven insights into interview performance. By comparing responses to successful interview patterns, they can offer actionable advice to enhance performance. One such dataset is the MIT Interview Dataset, which comprises 138 audio-visual recordings of mock interviews with internship-seeking students from the Massachusetts Institute of Technology (MIT). This dataset was used to predict hiring decisions and other interview-specific traits by extracting features related to non-verbal behavioral cues, linguistic skills, speaking rates, facial expressions, and head gestures.

Modern-day LLMs have some unique properties that allow them to exhibit similar capabilities when given contextual information and new data, even if that data is not as comprehensive as the dataset described above. I briefly highlight two of these properties below:

Real-Time Computation

One of the key strengths of LLMs is their ability to provide real-time analysis given structured data. This instantaneous feedback enables candidates to adjust their approach on the fly, improving their performance as they go. The architecture of LLMs, which are essentially complex neural networks, is optimized for efficient computation. These networks have already learnt a mapping between input and output, encoded in billions of parameters, and they use these learnt weights to perform mathematical computations at a rapid pace. This allows them to analyze conversational information such as interview responses in real time, providing immediate feedback to users during these interactive sessions.

Based on analysis and feedback, LLMs can help create personalized learning paths for career readiness preparation. They can even be tuned to recommend specific resources or exercises tailored to target areas for improvement, thereby maximizing their effectiveness.

How can Generative AI help my organization with career readiness?

In conclusion, the properties of large language models make them exceptionally well-suited for providing personalized career readiness preparation. Their advanced natural language understanding, adaptive learning mechanisms, data-driven insights, and real-time computation capabilities offer invaluable support to individuals navigating the complexities of pursuing their career goals. As LLMs continue to evolve, they hold the potential to revolutionize career development, empowering individuals to achieve their professional goals with confidence and competence.

The reliability and consistency of the output from these LLMs, however, depends heavily on the quality of your input data and on how precisely you define the context you provide. At Relativ, we help organizations gather input data and create guidelines with sufficient fidelity for their A.I. models to infer “what good looks like”. We help them experiment with their own data and understand how an LLM works with different contextual information, so they can expand these capabilities and begin to measure various skills that individuals exhibit during their conversational exchanges. When tailored to specific job descriptions, these customized A.I. models can give end users a competitive advantage by not only identifying the skills they require, but also helping them improve their performance on those skills through personalized feedback.

Head over to relativ.ai or reach out to us to learn how we can help you deploy your own AI models, infused with psychology and linguistics, to empower your organization and end users with the career readiness skills they require to meet the challenges of the future of work.
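For readers curious what the few-shot learning mentioned earlier in this post can look like in practice, here is a minimal sketch. It only assembles a prompt as text: a short instruction, a couple of worked examples, and the new interview answer that needs feedback. The function name `build_few_shot_prompt`, the labels, and the example answers are all hypothetical and invented for illustration. This is not a description of how Relativ or any particular LLM provider structures its prompts.

```python
def build_few_shot_prompt(instruction, examples, new_response):
    """Assemble a few-shot prompt: instruction, worked examples, then the new case."""
    parts = [instruction.strip(), ""]
    for answer, feedback in examples:
        parts.append(f"Interview answer: {answer}")
        parts.append(f"Feedback: {feedback}")
        parts.append("")  # blank line between examples
    # The new case ends with an empty "Feedback:" slot for the model to complete.
    parts.append(f"Interview answer: {new_response}")
    parts.append("Feedback:")
    return "\n".join(parts)


# Hypothetical instruction and examples, made up for this sketch.
instruction = "Give one sentence of coaching feedback on each interview answer."
examples = [
    ("I just did what my manager told me.",
     "Describe your own contribution and the result it produced."),
    ("I led a team of four and we shipped two weeks early.",
     "Strong and specific; add what you learned from the project."),
]
prompt = build_few_shot_prompt(
    instruction, examples, "I am a hard worker and a fast learner."
)
print(prompt)
```

The resulting text would then be sent to whichever model you are using. The examples are the "context" in this case: they show the model what good feedback looks like before it sees the new answer.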


With the explosion of Generative Artificial Intelligence (GenAI) and the widespread adoption of Large Language Models (LLMs), there are many opportunities for organizations and individuals to augment their existing functions with AI. As with any new technology, I have seen reactions ranging from fascination, to resistance to change, to attempts to disprove that the technology is beneficial by trying every possible way to make it fail. Worse, we constantly highlight one-sided dangers of the technology, with prejudice and bias, without taking the time to understand why or how it can be beneficial, what its strengths are, and what its limitations may be. Public perception is often the biggest threat to innovation.

Like many people, I have spent a lot of time experimenting with AI but haven't found a compelling explanation of "why" AI can help drive business outcomes. One of the key aspects that I've been focused on is the use of my own curated and contextual data, which has positively disrupted the outputs from these fantastic AI models.

As I continue my learning journey through my career and through life, I’ve decided to create a short series of blogs about my findings and my experiments in the space of GenAI and LLMs. While it will primarily serve as a reminder of my own career pathway, I intend to make this information relevant and helpful to anyone taking the time to read it. Throughout my blog posts, I will include relevant resources where a reader can find further information, should they wish to dive deeper into a certain topic. I hope you enjoy reading this series as much as I enjoyed writing it.

In this first blog post about this exciting area of research and application, I aim to explain the inner workings of Large Language Models, and some of their characteristics, in a way that allows us to use a data-driven approach to decision making. The hope is that this information will support the adoption of these AI models in our workplaces (and our personal lives), to augment our efforts and help us be more productive and efficient in the long run.

What are Large Language Models?

Almost everyone who has heard of Artificial Intelligence has probably also heard the term “Neural Network”. A neural network is a specific architecture that allows computers to learn the relationship between input samples and output samples, by mimicking how neurons in the brain signal each other. The first Neural Network, called a Perceptron, developed in 1957, had one layer of neurons, with adjustable weights and thresholds, between the inputs and the outputs. A fantastic introduction to this topic can be found here. Large Language Models (LLMs) are a type of Neural Network. In contrast to the Perceptron from 1957, some of these LLMs are vastly more complex, with nearly a hundred layers and 175 billion or more parameters (adjustable weights).

What do Large Language Models do?

One of the first practical applications of neural networks was to recognize binary patterns. Given a series of streaming bits (1s and 0s) as input, the network was designed to predict the next bit in the sequence (output). Similarly, in very simple terms, Large Language Models can predict the next word given a sequence of words as input. If that is all they can do, why are they so powerful, and why do they appear incredibly intelligent? The answer goes well beyond the scope of a blog post, but here is an incredible resource that will help you understand the inner workings of large language models.

Characteristics of Large Language Models

In the final subsection of this blog, I will attempt to highlight some properties or characteristics of Large Language Models. I will refer to these characteristics in various future blog posts, so the utility of these properties and their application areas can be better understood. It is these characteristics and properties that contribute to the ability of LLMs to appear to understand and generate human-like language.
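To make the idea of "predicting the next word given a sequence of words" a little more concrete, here is a deliberately tiny sketch. It is not how an LLM works internally: an LLM uses a neural network with billions of learned weights, whereas this toy simply counts which word most often follows each word in a small piece of text. The function names and the sample corpus are invented for this illustration. What it does share with an LLM is the overall loop: predict one word, append it, and feed the result back in as the next input.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start_word, max_words=5):
    """Repeatedly feed the latest prediction back in as the next input."""
    output = [start_word]
    for _ in range(max_words):
        next_word = predict_next_word(model, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)


# Hypothetical toy corpus, made up for this sketch.
corpus = "the candidate answered the question and the candidate asked a question"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))    # candidate
print(generate(model, "a", max_words=3))  # a question and the
```

In this corpus, "candidate" follows "the" twice and "question" follows it once, so the model predicts "candidate". Replace the counting table with a very large neural network trained on a very large body of text, and you have the rough shape of what an LLM does.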

What can Large Language Models help with?

At Relativ, we have been experimenting with Deep Learning, Psychology, Linguistics, and Large Language Models, by qualitatively assessing the generated text across thousands of interactions, and continuously instruction-tuning these models to quantize their output. We have learned that when LLMs are combined with proprietary algorithms and curated data, their output can be transformative and insightful. When these models are designed thoughtfully around the end-user experience and the intended business or academic outcome, we are starting to see some very promising results. We are already beta-testing these models in recruiting, sales, learning and development, retrospectives, and career readiness, where early adopters are reaping the rewards of experimenting early and gaining a competitive advantage from the learning that occurs.

In the next series of blogs, I will attempt to describe how Relativ's models are being used in each of the above fields, and why they can be a game changer in the long run. I will refer to the characteristics of LLMs highlighted in this blog entry, for continuity and chain-of-thought (pun intended), throughout this series. In the meantime, head over to relativ.ai or reach out to us to learn how we can help you deploy your own AI models, infused with psychology and linguistics, to help you drive business outcomes.

About

Arjun is an entrepreneur, technologist, and researcher, working at the intersection of machine learning, robotics, human psychology, and learning sciences. His passion lies in combining technological advancements in remote-operation, virtual reality, and control system theory to create high-impact products and applications.